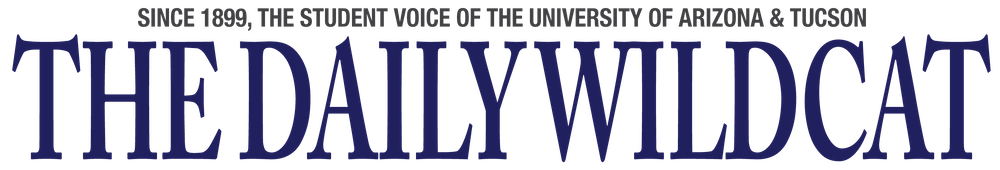

Marcia Rieke is an infrared astronomer and the principal investigator who led the design of the near-infrared camera (NIRcam) for the James Webb Space Telescope. The Daily Wildcat sat down with Rieke to discuss her work in infrared astronomy and on NIRcam in particular.

Daily Wildcat: Okay, so, first of all, introduce yourself.

Marcia Rieke: I’m Marcia Rieke. I’m a professor here on campus, and I’m the principal investigator for the near-infrared camera on the Webb telescope. That means I’ve been the leader of the team that designed, developed and tested the camera, and we get some observing time at the end, too.

DW: Very cool! How did you get started in astronomy, and in infrared astronomy specifically?

MR: Well, I was interested in astronomy from a relatively young age. I think I read too many science fiction books and wanted to go see other planets, which we now know exist. I got interested in infrared when I was, more or less, a senior in college, because it was a kind of astronomy that was brand new at the time. It seemed like a really exciting field to jump into because I could get into it near the beginning, so to speak.

DW: Tell me a little bit about what you do in general as an infrared astronomer.

MR: Well, right now I’m of course working on this camera that’s now on Webb, so part of what I do is help my team analyze the test data we’re taking to check out the telescope and make certain everything is working right. Images are taken through the telescope and sent down to the ground, then we grab them over the internet and analyze them to look for various features, signal strengths and so on.

DW: How did you get started with the James Webb Space Telescope specifically?

MR: So back in, would you believe, 1998, NASA headquarters issued what they call an announcement of opportunity. This was an opportunity to participate in brainstorming what the Webb mission might look like and what its science priorities should be. I sent in a reply to that announcement of opportunity and got selected to be on what they call the ad hoc science working group. We discussed and debated what the key science plans should be and, therefore, what kind of cameras and spectrometers would be needed on the telescope.

DW: What exactly does the NIRcam do as part of the telescope?

MR: It takes pictures mostly. It can take some spectra, but the main thing is taking pictures. Those pictures can either be of an interesting science target, which is of course what will happen most of the time later on, or, right now, of stars that are analyzed to help line up the 18 mirror segments, a process called wavefront sensing (complicated name). We’re taking engineering data right now, and the images are, in many ways, like what you get from a digital camera, except that they’re at a longer wavelength and obviously have to get sent back to the ground from this big telescope. But the basic format is like what you get from a digital camera.

DW: What do you think we will learn from the photos taken by the NIRcam?

MR: So I hope the science pictures will lead us to some surprise discovery that we’re not guessing right now, but that’s easier to say than to describe. What we know we can do is things like finding the first galaxies to form after the big bang. Hubble has given us some clues, but because it doesn’t have much infrared capability, it can’t get all the way back to the earliest times. And using the spectroscopic capability in NIRcam (meaning the ability to spread the light out into colors), we hope to analyze the atmospheres of some exoplanets to see what they’re made of. Now, beyond our own science program, other people may actually try to do the really hard thing, which is to measure the atmospheric composition of an Earth-like planet. My team didn’t have the courage to spend that much observing time on one target, but I’m sure some people will see if they can find the kinds of molecules that we associate with our own atmosphere.


DW: Tell me more about how this device can “peer back in time and space.”

MR: Yeah, that’s what I mean by finding the first galaxies to form after the big bang. The way that works is that light doesn’t travel infinitely fast. It has a speed of 186,000 miles per second, which is pretty darn fast, but imagine it having to traverse almost the entire size of the universe. By knowing how far away something is, we know how long the light has been traveling to us. It’s simple: distance and velocity give you a time. So we know how far away these objects are, and so we know exactly what age we’re seeing them at, because so far Hubble is seeing … light from objects where the light has taken about 13.2 billion years to get to us. That means the light we’re seeing now left those objects only about 500 million years after the big bang, and we’re going to push that back to maybe only 100 million years after the big bang. That may not sound like a big change in time, but it’s a factor of five. It’s like the difference between, you know, Hubble seeing a five-year-old and us hoping to see something much closer to a baby.

DW: What did the process of developing this device look like?

MR: So that science working group laid out, in broad brush strokes, what NIRcam was to be capable of. Then in 2001, NASA issued another announcement of opportunity to propose to be the group that would actually build NIRcam. I formed a team with a number of people here at the university and a few others, and we teamed with an aerospace company, Lockheed Martin, to propose a specific design for NIRcam.

We were fortunate to win that competition. We were selected in June of 2002, and then we started working with engineers at Lockheed to finalize the design of NIRcam. We did some of the construction here on campus for the actual infrared light-sensing packages (the light sensor chips, so to speak), and we tested those. We helped Lockheed test the assembled instrument, and we gave them lots of advice along the way. Engineers are very good at following requirements, but sometimes the requirements don’t capture all the facets of our problem, so we would explain exactly what it was we meant when we wrote the requirement.

Then, after they finished building it and we tested it, we had to test it again when it was delivered to Goddard Space Flight Center and put into a structure that holds the instruments on the back of the telescope. There was one more round of tests where the instruments were connected to the telescope and we checked that the light path worked properly. Now, after launch, we’re participating in making certain that both the telescope and the instrument are working as we planned, so there’s a lot of testing.

DW: What’s something that you think people don’t know that you find really fascinating or interesting about infrared astronomy?

MR: I think the man in the street doesn’t know much about what astronomers look at other than visible light, because that’s what Hubble shows. I don’t think people completely appreciate that there are other kinds of light, or why it’s important to look at them. That’s something I’d like people to know and understand better: it’s not just infrared light that’s good to look at. There are radio astronomers and X-ray astronomers; there’s quite a bit more than just visible light.

DW: What are some of your other favorite projects that you’ve done in your field?

MR: I got my start in the field working here, building cameras and spectrometers for ground-based telescopes, and that was before infrared astronomy was mature enough to propose for space projects. Our original detector had a single pixel, and to make what you would think of as a map, or a photo, you had to step it across the sky, which was very tedious. You’d take a measurement, move the telescope, take another measurement, and keep doing that until you had a square array of values that represented what the thing looked like. The first chip we actually got was only 32 by 32 pixels, but you could take a picture with this tiny device, and it was like, ‘Geez, I’ve died and gone to heaven, look at this, I can now get 1,024 points at once instead of one point.’ Remarkably, NIRcam has a grand total of 40 megapixels, so it’s been huge progress in just my lifetime, from one pixel to 40 megapixels. I think that’s pretty dramatic.

DW: Thank you very much!

Follow Sean Meixner on Twitter